Deploy HA cluster postgres-14(with timescaledb) with repmgr,consul,consul-template and haproxy.

All docker’s for this cluster already builed and i pushed repos with docker’s to github. Base docker for postgres – bitnami vendor.

System Requirements:

- docker swarm with master(minimal 1) and 3 worker nodes(worker-1,worker-2,worker-3)

Files:

- Dockers:

Deploy order:

- tune sysctl

- deploy consul server with persistent storage

- deploy postgres ha cluster with repmgr

- deploy haproxy for postgres cluster

- test connections

Tune sysctl

sysctl -w kern.sysv.shmmax=16777216

sysctl -w kern.sysv.shmall=16777216Make all steps(unless otherwise specified) from manager node(with shared files across cluster with network file system/storage like ceph or glustertfs)

Step 1. Deploy consul server

Create overlay network with name dev_network, to witch we bill attach our stacks.

docker network create --driver=overlay --attachable dev_networkmkdir -p /docker-compose/SWARM/services/consul/

cd /docker-compose/SWARM/services/consul/

mkdir -p data/consul

chmod 777 -R data/consulcat <<OEF> docker-compose.yml

version: '3.8'

services:

consul-prod:

image: "devsadds/consul:1.8.4"

hostname: "consul-prod-{{.Task.ID}}"

volumes:

- "./data/consul:/consul/data"

networks:

dev_network:

aliases:

- consul-prod.service.consul

command: "consul agent -config-file /consul/config/config.json"

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 13

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.consul-prod == true ]

networks:

dev_network:

driver: overlay

external: true

OEFCreate .env file

cat <<OEF> .env

DOCKER_SWARM_STACK_NAME=prod-consul

OEFCreate file deploy-to-swarm.sh

cat <<OEF> deploy-to-swarm.sh

#!/bin/bash

ENV_FILE=".env"

deploy_to_swarm() {

export \$(cat \${ENV_FILE}) &&

docker stack deploy -c docker-compose.yml \${DOCKER_SWARM_STACK_NAME} --prune --resolve-image always

}

deploy_to_swarm

OEFCreate file remove-to-swarm.sh

cat <<OEF> remove-to-swarm.sh

#!/bin/bash

ENV_FILE=".env"

remove_from_swarm() {

export \$(cat \${ENV_FILE}) && docker stack rm \${DOCKER_SWARM_STACK_NAME}

}

function clean_docker_system() {

docker system prune -f

docker network prune -f

}

remove_from_swarm

OEFchmod +x remove-to-swarm.sh deploy-to-swarm.sh

docker node update --label-add consul-prod=true worker-1

docker node update --label-add consul-prod=true worker-2

docker node update --label-add consul-prod=true worker-3And deploy

./deploy-to-swarm.shDeploy postgres ha cluster

Create dirs

mkdir -p /docker-compose/SWARM/databases/postgres12

cd /docker-compose/SWARM/databases/postgres12cat <<OEF> docker-compose.yml

version: '3.8'

services:

pg-1:

image: devsadds/postgresql-repmg-14.1.0:v1.2.0

hostname: pg-1-{{.Task.ID}}

environment:

POSTGRESQL_POSTGRES_PASSWORD: "phah7uutheePighphahdsdsdsd7uutheePighgfgfgf"

POSTGRESQL_USERNAME: "postgres"

POSTGRESQL_PASSWORD: "phah7uutheePighphahdsdsdsd7uutheePighgfgfgf"

POSTGRESQL_DATABASE: "startdb"

REPMGR_PASSWORD: "dsd324khlh47tkggdfMmdlhdlfdg"

REPMGR_PRIMARY_HOST: "pg-1"

REPMGR_PRIMARY_PORT: "5432"

REPMGR_PARTNER_NODES: "pg-1,pg-2,pg-3"

REPMGR_NODE_NAME: "pg-1"

REPMGR_NODE_NETWORK_NAME: "pg-1"

REPMGR_PORT_NUMBER: "5432"

POSTGRESQL_DATA_DIR: "/bitnami/postgresql/data"

SERVER_NAME: "convy"

DISCOVERY_SERVICE_HOST: "consul-prod"

CONSUL_SERVICE_NAME: "postgres-14-repmgr"

POSTGRESQL_SHARED_PRELOAD_LIBRARIES: "repmgr,pg_stat_statements"

POSTGRESQL_MAX_CONNECTIONS: "300"

REPMGR_NODE_PRIORITY: "100"

volumes:

- "pg-1_data:/bitnami/postgresql"

- type: tmpfs

target: /dev/shm

tmpfs:

size: 2048000000 # (this means 2GB)

networks:

dev_network:

stop_grace_period: 180s

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 1000

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.pg-1 == true ]

pg-2:

image: devsadds/postgresql-repmg-14.1.0:v1.2.0

hostname: pg-2-{{.Task.ID}}

environment:

POSTGRESQL_POSTGRES_PASSWORD: "phah7uutheePighphahdsdsdsd7uutheePighgfgfgf"

POSTGRESQL_USERNAME: "postgres"

POSTGRESQL_PASSWORD: "phah7uutheePighphahdsdsdsd7uutheePighgfgfgf"

POSTGRESQL_DATABASE: "startdb"

REPMGR_PASSWORD: "dsd324khlh47tkggdfMmdlhdlfdg"

REPMGR_PRIMARY_HOST: "pg-1"

REPMGR_PRIMARY_PORT: "5432"

REPMGR_PARTNER_NODES: "pg-1,pg-2,pg-3"

REPMGR_NODE_NAME: "pg-2"

REPMGR_NODE_NETWORK_NAME: "pg-2"

REPMGR_PORT_NUMBER: "5432"

POSTGRESQL_DATA_DIR: "/bitnami/postgresql/data"

SERVER_NAME: "convy"

DISCOVERY_SERVICE_HOST: "consul-prod"

CONSUL_SERVICE_NAME: "postgres-14-repmgr"

POSTGRESQL_SHARED_PRELOAD_LIBRARIES: "repmgr,pg_stat_statements"

POSTGRESQL_MAX_CONNECTIONS: "200"

REPMGR_NODE_PRIORITY: "90"

volumes:

- "pg-2_data:/bitnami/postgresql"

- type: tmpfs

target: /dev/shm

tmpfs:

size: 2048000000 # (this means 2GB)

networks:

dev_network:

stop_grace_period: 180s

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 1000

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.pg-2 == true ]

pg-3:

image: devsadds/postgresql-repmg-14.1.0:v1.2.0

hostname: pg-3-{{.Task.ID}}

environment:

POSTGRESQL_POSTGRES_PASSWORD: "phah7uutheePighphahdsdsdsd7uutheePighgfgfgf"

POSTGRESQL_USERNAME: "postgres"

POSTGRESQL_PASSWORD: "phah7uutheePighphahdsdsdsd7uutheePighgfgfgf"

POSTGRESQL_DATABASE: "startdb"

REPMGR_PASSWORD: "dsd324khlh47tkggdfMmdlhdlfdg"

REPMGR_PRIMARY_HOST: "pg-1"

REPMGR_PRIMARY_PORT: "5432"

REPMGR_PARTNER_NODES: "pg-1,pg-2,pg-3"

REPMGR_NODE_NAME: "pg-3"

REPMGR_NODE_NETWORK_NAME: "pg-3"

REPMGR_PORT_NUMBER: "5432"

POSTGRESQL_DATA_DIR: "/bitnami/postgresql/data"

SERVER_NAME: "convy"

DISCOVERY_SERVICE_HOST: "consul-prod"

CONSUL_SERVICE_NAME: "postgres-14-repmgr"

POSTGRESQL_SHARED_PRELOAD_LIBRARIES: "repmgr,pg_stat_statements"

POSTGRESQL_MAX_CONNECTIONS: "200"

REPMGR_NODE_PRIORITY: "80"

volumes:

- "pg-3_data:/bitnami/postgresql"

- type: tmpfs

target: /dev/shm

tmpfs:

size: 2048000000 # (this means 2GB)

networks:

dev_network:

stop_grace_period: 180s

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 1000

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.pg-3 == true ]

volumes:

pg-1_data:

pg-2_data:

pg-3_data:

networks:

dev_network:

driver: overlay

external: true

OEFCreate .env file

cat <<OEF> .env

DOCKER_SWARM_STACK_NAME=prod-postgres-14

OEFCreate file deploy-to-swarm.sh

cat <<OEF> deploy-to-swarm.sh

#!/bin/bash

ENV_FILE=".env"

deploy_to_swarm() {

export \$(cat \${ENV_FILE}) &&

docker stack deploy -c docker-compose.yml \${DOCKER_SWARM_STACK_NAME} --prune --resolve-image always

}

deploy_to_swarm

OEFCreate file remove-to-swarm.sh

cat <<OEF> remove-to-swarm.sh

#!/bin/bash

ENV_FILE=".env"

function remove_from_swarm() {

export \$(cat \${ENV_FILE}) && docker stack rm \${DOCKER_SWARM_STACK_NAME}

}

function clean_docker_system() {

docker system prune -f

docker network prune -f

}

remove_from_swarm

OEFchmod +x remove-to-swarm.sh deploy-to-swarm.shUpdate nodes labels

docker node update --label-add pg-1=true worker-1

docker node update --label-add pg-2=true worker-2

docker node update --label-add pg-3=true worker-3

And deploy

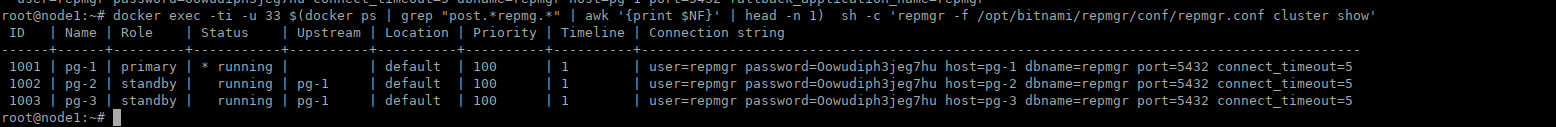

./deploy-to-swarm.shSee cluster status on one of postures nodes

docker exec -ti -u 33 $(docker ps | grep "post.*repmg.*" | awk '{print $NF}' | head -n 1) sh -c 'repmgr -f /opt/bitnami/repmgr/conf/repmgr.conf cluster show'During first start you will see errors in cluster state.

Fix it(in my case i scale nodes with errors to 0 replicas and rescale again to 1 replicas)

docker service scale prod-postgres-14_pg-1=0 -d && docker service scale prod-postgres-14_pg-3=0 -d && sleep 25 && docker service scale prod-postgres-14_pg-1=1 -d && docker service scale prod-postgres-14_pg-3=1 -d

And see status again

docker exec -ti -u 33 $(docker ps | grep "post.*repmg.*" | awk '{print $NF}' | head -n 1) sh -c 'repmgr -f /opt/bitnami/repmgr/conf/repmgr.conf cluster show'

It will be ok.

Deploy haproxy

Create dirs

mkdir -p /docker-compose/SWARM/services/haproxy-postgres/

cd /docker-compose/SWARM/services/haproxy-postgres/Create docker-compose

cat <<OEF> docker-compose.yml

version: "3.8"

x-logging:

&rsyslog-logging

driver: syslog

options:

syslog-address: "udp://127.0.0.1:515"

x-logging-json:

&json-logging

driver: json-file

options:

max-size: '100m'

max-file: '5'

x-deploy:

&haproxy-deploy

mode: global

#replicas: 2

restart_policy:

condition: on-failure

delay: 10s

max_attempts: 40

window: 10s

update_config:

parallelism: 1

delay: 5s

failure_action: rollback

monitor: 25s

max_failure_ratio: 0.3

order: stop-first

placement:

constraints: [ node.labels.haproxy-postgres == true ]

services:

prod-haproxy-postgres:

image: registry.gitlab.com/devops_containers/dockers/haproxy-postgres:2.5.1_consul_v1.0.0

ports:

- target: 5432

published: 5432

protocol: tcp

mode: host

- target: 5433

published: 5433

protocol: tcp

mode: host

- target: 80

published: 33381

protocol: tcp

mode: host

- target: 9101

published: 9101

protocol: tcp

mode: host

environment:

DOCKER_ROLE: "haproxy-postgres"

DISCOVERY_SERVICE_HOST: "consul-prod"

DISCOVERY_SERVICE_NAME: "postgres-14-repmgr"

HAPROXY_STATS_URL: "/"

HAPROXY_STATS_AUTH_USER: "devsaddsuser"

HAPROXY_STATS_AUTH_PASSWORD: "devsaddspassword"

HAPROXY_STATS_ALLOW_HOSTS: "192.168.56.0/24 10.0.0.0/8 172.0.0.0/8 127.0.0.1/32"

HAPROXY_SERVICE_ALLOW_HOSTS: "192.168.56.0/24 10.0.0.0/8 172.0.0.0/8 127.0.0.1/32"

SERVER_NAME: "haproxy-postgres"

HAPROXY_POSTGRES_BACKEND_MAX_CONN: "120"

HAPROXY_POSTGRES_CHECK_USER: "hpclustercheckuser"

DISCOVERY_SERVICE_NAME_POSTGRES_READER: "postgres-14-repmgr"

DISCOVERY_SERVICE_NAME_POSTGRES_WRITER: "postgres-14-repmgr"

networks:

dev_network:

deploy:

<<: *haproxy-deploy

networks:

dev_network:

driver: overlay

external: true

OEFCreate .env file

cat <<OEF> .env

DOCKER_SWARM_STACK_NAME=prod-haproxy-postgres

OEFCreate file deploy-to-swarm.sh

cat <<OEF> deploy-to-swarm.sh

#!/bin/bash

ENV_FILE=".env"

deploy_to_swarm() {

export \$(cat \${ENV_FILE}) &&

docker stack deploy -c docker-compose.yml \${DOCKER_SWARM_STACK_NAME} --prune --resolve-image always

}

deploy_to_swarm

OEFCreate file remove-to-swarm.sh

cat <<OEF> remove-to-swarm.sh

#!/bin/bash

ENV_FILE=".env"

remove_from_swarm() {

export \$(cat \${ENV_FILE}) && docker stack rm \${DOCKER_SWARM_STACK_NAME}

}

clean_docker_system() {

docker system prune -f

docker network prune -f

}

remove_from_swarm

OEFchmod +x remove-to-swarm.sh deploy-to-swarm.sh

Update nodes labels

docker node update --label-add haproxy-postgres=true worker-1

docker node update --label-add haproxy-postgres=true worker-2

docker node update --label-add haproxy-postgres=true worker-3And deploy

./deploy-to-swarm.shCreate user for haproxy check on new database cluster(from container master server).

postgresrootcreate user hpclustercheckuser;

ALTER ROLE hpclustercheckuser WITH LOGIN;

ALTER USER hpclustercheckuser WITH PASSWORD 'devsaddspassword';

quitSnippets

Create databases in cluster with scripts from postgres master container

/bin/bash /pgscripts/02-create-db.sh "devsaddsuser" "devsaddspassword" "devsaddsdbname"Alter created database with user

/bin/bash /pgscripts/03-change-db-owner.sh "devsaddsuser" "devsaddsdbname"Backup scripts

download script there

Usage script:

./backup_db.sh --backup_root_dir=/docker-compose/bup --db_backup_enable_mysql=false --db_backup_enable_postgres=true --db_stack_postgres=docker --db_stack_mysql=docker --days_to_store_backup=15 --pg_day_for_vacuum=6 --rocket_chat_url="http://127.0.0.1"backup all databases from postgresql container with 15 days store and vaccum analise on 6 day ow week

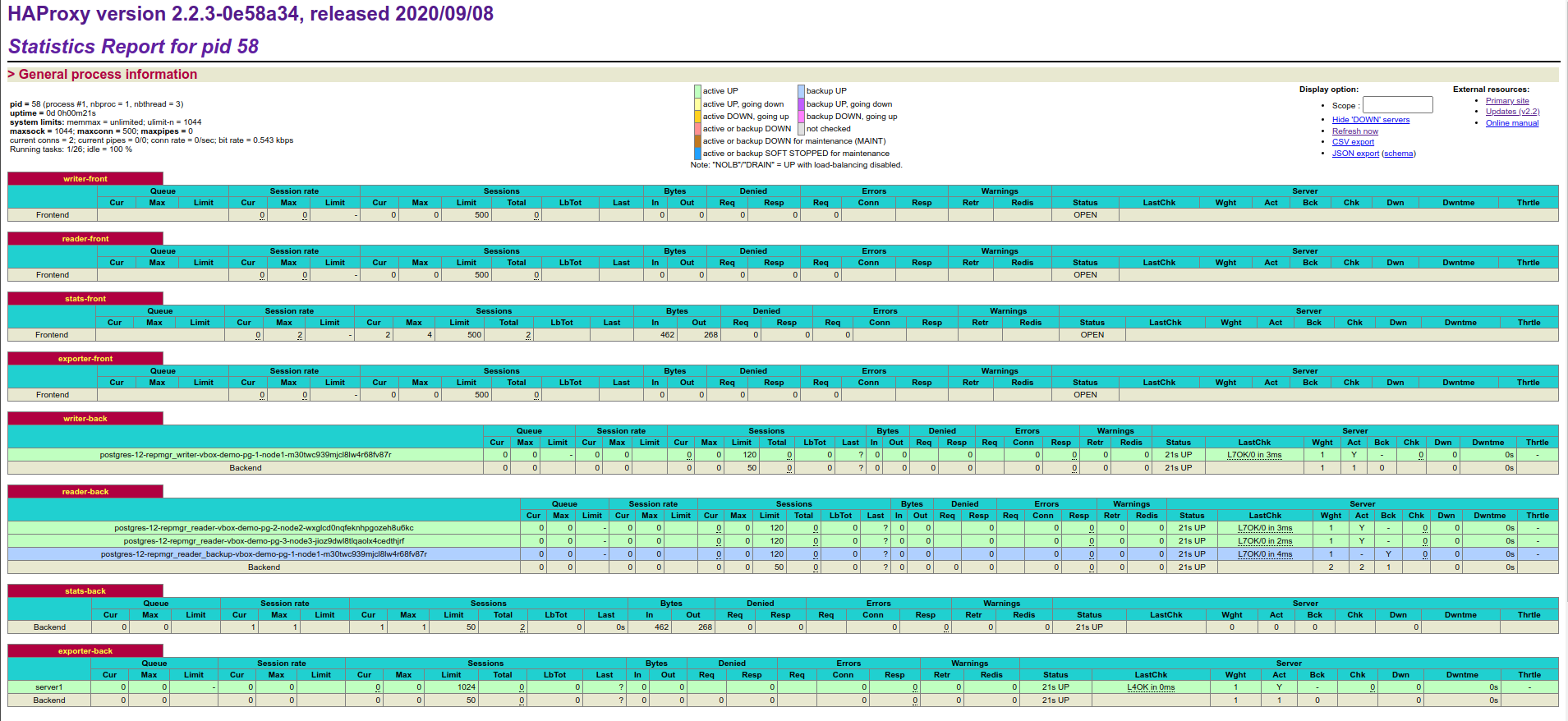

See haproxy statistics

Open ip with worker node and port 33381, example on my host http://192.168.56.103:33381/