Building on other snippets, let's create a Percona XtraDB Cluster 8.0 with Consul in a Docker Swarm cluster

Create a Percona XtraDB Cluster in a Docker Swarm cluster.

REQUIREMENTS

- 3 swarm worker nodes (node1, node2, node3)

- 1 swarm manager node (node4)

IPv4 addresses of the nodes

node1 - swarm worker - 192.168.1.108

node2 - swarm worker - 192.168.1.109

node3 - swarm worker - 192.168.1.110

node4 - swarm manager - 192.168.1.111

PREPARE

Add labels to the swarm nodes

docker node update --label-add pxc=true node1

docker node update --label-add pxc=true node2

docker node update --label-add pxc=true node3

docker node update --label-add consul=true node4
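The four label commands follow one pattern, so they can be generated from a short node-to-label list. This is a minimal sketch that assumes the node names listed above. `label_cmds` is a hypothetical helper name, and it only prints the commands (a dry run) so they can be reviewed before being piped to `sh` on the manager node.

```shell
#!/bin/sh
# label_cmds: print one "docker node update" command per "node=label" pair.
# Hypothetical helper; it prints the commands instead of running them.
label_cmds() {
  for pair in "$@"; do
    node="${pair%%=*}"    # text before the first "="
    label="${pair#*=}"    # text after the first "="
    printf 'docker node update --label-add %s=true %s\n' "$label" "$node"
  done
}

# Example (run on the manager node):
#   label_cmds node1=pxc node2=pxc node3=pxc node4=consul | sh
```

Printing first keeps the step reviewable; the placement constraints in the compose file rely on exactly these label names.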

Set heartbeat period

docker swarm update --dispatcher-heartbeat 20s

Make directories

mkdir -p /docker-compose/SWARM/pxc8/configs/consul

Create the Consul server config file

vim /docker-compose/SWARM/pxc8/configs/consul/config.json

{
  "advertise_addr": "{{ GetInterfaceIP \"eth0\" }}",
  "bind_addr": "{{ GetInterfaceIP \"eth0\" }}",
  "addresses": {
    "http": "0.0.0.0"
  },
  "ports": {
    "server": 8300,
    "http": 8500,
    "dns": 8600
  },
  "skip_leave_on_interrupt": true,
  "server_name": "pxc.service.consul",
  "primary_datacenter": "dc1",
  "acl_default_policy": "allow",
  "acl_down_policy": "extend-cache",
  "datacenter": "dc1",
  "data_dir": "/consul/data",
  "bootstrap": true,
  "server": true,
  "ui": true
}

Create docker compose file

vim /docker-compose/SWARM/pxc8/docker-compose.yml

version: '3.6'
services:
  consul:
    image: "devsadds/consul:1.8.3"
    hostname: consul
    volumes:
      - "/docker-compose/SWARM/pxc8/configs/consul:/consul/config"
    ports:
      - target: 8500
        published: 8500
        protocol: tcp
        mode: host
    networks:
      pxc8-net:
        aliases:
          - pxc.service.consul
    command: "consul agent -config-file /consul/config/config.json"
    deploy:
      mode: replicated
      replicas: 1
      restart_policy:
        condition: on-failure
        delay: 15s
        max_attempts: 13
        window: 18s
      update_config:
        parallelism: 1
        delay: 20s
        failure_action: continue
        monitor: 60s
        max_failure_ratio: 0.3
      placement:
        constraints: [ node.labels.consul == true ]
  pxc:
    image: "devsadds/pxc:8.0.19-10.1-consul-1.8.3-focal-v1.1.0"
    environment:
      CLUSTER_NAME: "percona"
      MYSQL_ROOT_PASSWORD: "root32456"
      MYSQL_PROXY_USER: "mysqlproxyuser"
      MYSQL_PROXY_PASSWORD: "mysqlproxy32456"
      PXC_SERVICE: "pxc.service.consul"
      DISCOVERY_SERVICE: "consul"
      DATADIR: "/var/lib/mysql"
      MONITOR_PASSWORD: "mys3232323323"
      XTRABACKUP_PASSWORD: "mys3232323323"
    volumes:
      - "pxc_8_0:/var/lib/mysql"
    networks:
      pxc8-net:
        aliases:
          - mysql
    deploy:
      mode: replicated
      replicas: 1
      restart_policy:
        condition: on-failure
        delay: 15s
        max_attempts: 23
        window: 180s
      update_config:
        parallelism: 1
        delay: 20s
        failure_action: continue
        monitor: 60s
        max_failure_ratio: 0.3
      placement:
        constraints: [ node.labels.pxc == true ]
volumes:
  pxc_8_0:
networks:
  pxc8-net:
    driver: overlay
    ipam:
      driver: default
      config:
        - subnet: 10.23.0.0/24

Deploy stack

cd /docker-compose/SWARM/pxc8

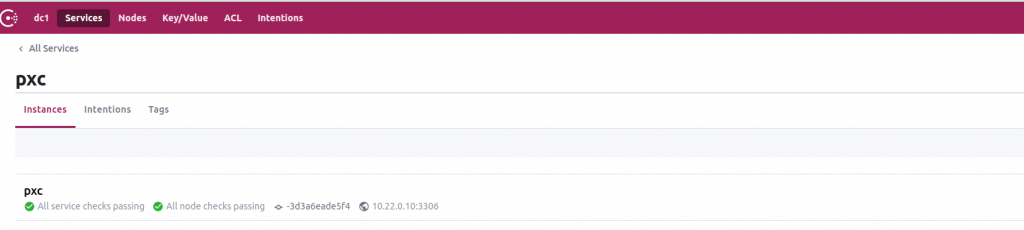

docker stack deploy -c docker-compose.yml pxc8 --resolve-image always --prune --with-registry-auth

Go to the web UI (unsecured)

http://192.168.1.111:8500/ui/dc1/services/pxc8/instances

Wait until the first node in the cluster is healthy.
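Besides the Consul UI, readiness can also be checked from the node itself. This is a rough sketch, assuming the mysql client is available inside the pxc container and that the status is fetched with `mysql -N -B` (tab-separated, no header). `node_is_ready` is a hypothetical helper name. It treats the node as ready when `wsrep_cluster_status` is `Primary` and `wsrep_local_state_comment` is `Synced`.

```shell
#!/bin/sh
# node_is_ready: read "name<TAB>value" status lines on stdin and succeed
# only if the node is in a Primary component and fully synced.
node_is_ready() {
  awk '
    $1 == "wsrep_cluster_status"      { status = $2 }
    $1 == "wsrep_local_state_comment" { state  = $2 }
    END { exit !(status == "Primary" && state == "Synced") }
  '
}

# Example, inside the pxc container (interactive password prompt assumed):
#   mysql -uroot -p -N -B -e "SHOW GLOBAL STATUS LIKE 'wsrep_%'" | node_is_ready && echo ready
```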

Then scale to 3 nodes

docker service scale pxc8_pxc=3 -d

Wait until the cluster has scaled.
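Because `-d` returns immediately, the wait can be scripted by polling the replica count. This is a minimal sketch, assuming it runs on the manager node. `service_ready` is a hypothetical helper that parses the output of `docker service ls --format '{{.Name}} {{.Replicas}}'`. Running replicas only mean the containers are up, not that Galera has finished syncing.

```shell
#!/bin/sh
# service_ready: read "<name> <running>/<desired>" lines on stdin and succeed
# when the named service has all of its desired replicas running.
service_ready() {
  awk -v svc="$1" '
    $1 == svc { split($2, r, "/"); ok = (r[1] == r[2] && r[2] > 0) }
    END { exit !ok }
  '
}

# Example polling loop on the manager node:
#   until docker service ls --format '{{.Name}} {{.Replicas}}' | service_ready pxc8_pxc; do
#     sleep 5
#   done
```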

Scale the cluster to one node

docker service scale pxc8_pxc=1 -d

A cluster scaled down to one node becomes non-Primary and is not ready for operations

SHOW GLOBAL STATUS LIKE 'wsrep_cluster_status';

+----------------------+-------------+
| Variable_name        | Value       |
+----------------------+-------------+
| wsrep_cluster_status | non-Primary |
+----------------------+-------------+

After scaling to one node, run the following command on the last remaining pxc node (the most advanced node) to bring the cluster from the non-Primary state back to Primary

SET GLOBAL wsrep_provider_options='pc.bootstrap=YES';

The node now operates as the starting node in a new Primary Component.
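Bootstrapping a node that is already Primary is unnecessary, so the statement can be guarded by the current status. This is a sketch under the same assumption of `mysql -N -B` output. `bootstrap_sql` is a hypothetical helper that only prints the statement, so it can be inspected before being passed back to mysql. It should be used on a single node only, the most advanced one.

```shell
#!/bin/sh
# bootstrap_sql: read the "name<TAB>value" line for wsrep_cluster_status on
# stdin and print the bootstrap statement only when the node is not Primary.
bootstrap_sql() {
  awk '
    $1 == "wsrep_cluster_status" && $2 != "Primary" {
      # \047 is a single quote, written as an octal escape inside awk
      print "SET GLOBAL wsrep_provider_options=\047pc.bootstrap=YES\047;"
    }
  '
}

# Example, inside the pxc container:
#   mysql -uroot -p -N -B -e "SHOW GLOBAL STATUS LIKE 'wsrep_cluster_status'" | bootstrap_sql
```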

SHOW GLOBAL STATUS LIKE 'wsrep_cluster_status';

+----------------------+---------+
| Variable_name        | Value   |
+----------------------+---------+
| wsrep_cluster_status | Primary |
+----------------------+---------+

Now we can scale our cluster to 3 or more nodes

docker service scale pxc8_pxc=3 -d

Fix the cluster after a crash of all nodes

Edit the Galera state files and set safe_to_bootstrap to 1 on the node with the latest data.
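One way to find the node with the latest data is to compare the `seqno` value in `grastate.dat` on each worker; the highest value is the most advanced. This is a minimal sketch, assuming GNU sed and the volume path used in this snippet. `grastate_seqno` and `mark_safe_to_bootstrap` are hypothetical helper names. After an unclean shutdown the seqno may be -1, in which case the position has to be recovered by other means (for example with mysqld's --wsrep-recover option) before comparing.

```shell
#!/bin/sh
# grastate_seqno: print the seqno recorded in a grastate.dat file.
grastate_seqno() {
  awk -F': *' '$1 == "seqno" { print $2 }' "$1"
}

# mark_safe_to_bootstrap: set safe_to_bootstrap to 1 in a grastate.dat file.
# Uses in-place editing (GNU sed); run it only while the pxc service is stopped.
mark_safe_to_bootstrap() {
  sed -i 's/^safe_to_bootstrap:.*/safe_to_bootstrap: 1/' "$1"
}

# Example on a worker node:
#   f=/var/lib/docker/volumes/pxc8_pxc_8_0/_data/grastate.dat
#   grastate_seqno "$f"            # compare this value across node1..node3
#   mark_safe_to_bootstrap "$f"    # only on the node with the highest seqno
```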

cd /docker-compose/SWARM/pxc8

docker stack rm pxc8

Edit file /var/lib/docker/volumes/pxc8_pxc_8_0/_data/gvwstate.dat

nano /var/lib/docker/volumes/pxc8_pxc_8_0/_data/gvwstate.dat

my_uuid: 505b00f5-f33a-11ea-9ee4-abd76ca92272

#vwbeg

view_id: 3 505b00f5-f33a-11ea-9ee4-abd76ca92272 52

bootstrap: 0

member: 505b00f5-f33a-11ea-9ee4-abd76ca92272 0

member: 88f6da78-f1fc-11ea-81cc-e386dd9bf4d3 0

member: 91aaf3ec-f33a-11ea-88f8-93c04e238f30 0

#vwend

Then change it so that only this node remains in the member list:

my_uuid: 505b00f5-f33a-11ea-9ee4-abd76ca92272

#vwbeg

view_id: 3 505b00f5-f33a-11ea-9ee4-abd76ca92272 52

bootstrap: 0

member: 505b00f5-f33a-11ea-9ee4-abd76ca92272 0

#vwend
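The same gvwstate.dat edit can be done without an editor. This is a minimal sketch: `trim_gvwstate` is a hypothetical helper that keeps only the member line matching the node's own `my_uuid`, and it relies on `my_uuid` appearing before the member lines, as in the file shown here.

```shell
#!/bin/sh
# trim_gvwstate: print a gvwstate.dat with every member line removed
# except the one whose UUID matches this node's my_uuid.
trim_gvwstate() {
  awk '
    $1 == "my_uuid:" { me = $2 }
    $1 == "member:" && $2 != me { next }
    { print }
  ' "$1"
}

# Example (keep a backup, then rewrite the file from it):
#   f=/var/lib/docker/volumes/pxc8_pxc_8_0/_data/gvwstate.dat
#   cp "$f" "$f.bak" && trim_gvwstate "$f.bak" > "$f"
```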

Edit file /var/lib/docker/volumes/pxc8_pxc_8_0/_data/grastate.dat

nano /var/lib/docker/volumes/pxc8_pxc_8_0/_data/grastate.dat

safe_to_bootstrap: 1

and start the cluster with one node again

cd /docker-compose/SWARM/pxc8

docker stack deploy -c docker-compose.yml pxc8 --resolve-image always --prune --with-registry-auth

Reconnect pxc nodes to the Consul container if the Consul container was restarted

Go into the pxc container

ps aux | grep consul

kill consul agent

kill -9 13

and run the process again in the background, reusing its previous command line with & added at the end

/bin/consul agent -retry-join consul -client 0.0.0.0 -bind 10.22.0.17 -node -99f341353c95 -data-dir /tmp -config-file /tmp/pxc.json &
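The PID (13 above) differs between containers, so it can be read from the process list instead of being typed by hand. This is a rough sketch, assuming `ps aux` is available inside the pxc container. `consul_agent_pid` is a hypothetical helper name.

```shell
#!/bin/sh
# consul_agent_pid: read "ps aux" output on stdin and print the PID (column 2)
# of the first "consul agent" process, skipping grep/awk helper processes.
consul_agent_pid() {
  awk '/consul agent/ && !/grep|awk/ { print $2; exit }'
}

# Example, inside the pxc container:
#   kill -9 "$(ps aux | consul_agent_pid)"
```

The full command line of the old agent is visible in the same `ps aux` output, so it is worth copying it before killing the process.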