Percona xtradb cluster 5.7 with haproxy,consul and supervisord in docker swarm cluster

Create percona xtradb cluster in docker swarm cluster. Proccesses in pxc docker run with supervisord, nodes pxc stored in consul server.

Haproxy create with consul template auto update haproxy config.

REQUIREMENTS

- 3 nodes swarm workers(node1,node2,node3)

- 1 node swarm manager(node4)

Ip’s v4 nodes

node1 - swarm worker - 192.168.1.108

node2 - swarm worker - 192.168.1.109

node3 - swarm worker - 192.168.1.110

node4 - swarm manager - 192.168.1.111PREPARE

Add label to swarm nodes

docker node update --label-add pxc1=true node1

docker node update --label-add pxc2=true node2

docker node update --label-add pxc3=true node3

docker node update --label-add consul=true node4

Set heartbeat period

docker swarm update --dispatcher-heartbeat 20sMake directories

mkdir -p /docker-compose/SWARM/databases/pxc5_7

Create docker compose file

vim /docker-compose/SWARM/databases/pxc5_7/docker-compose.yml

version: '3.6'

services:

consul-prod:

image: "devsadds/consul:1.9.3-v.1.0.8"

hostname: consul-prod-{{.Node.Hostname}}-{{.Task.ID}}

ports:

- target: 8500

published: 8600

protocol: tcp

mode: host

volumes:

- consul-data:/consul/data

environment:

CONSUL_SERVER_NAME: "pxc.service.consul"

CONSUL_SERVER_NAME_ENCRYPT_TOKEN: ""

CONSUL_SERVER_LOG_LEVEL: "warn"

CONSUL_CLEANUP_DEAD_SERVERS: "true"

CONSUL_SERVER_DATACENTER: "dc1"

CONSUL_SERVER_CLUSTER_SIZE: "3"

CONSUL_SERVER_NETWORK_REJOIN_HOST: "consul"

CONSUL_SERVER_INTERFACE_BIND: "eth0"

networks:

network_dev_dev-network:

aliases:

- consul-prod.service.consul

- prod-consul

- pxc.service.consul

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 13

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.consul == true ]

pxc-5-7-1:

image: devsadds/pxc:5.7.32-35-57-consul-1.9.3-bionic-v2.3.3

hostname: pxc-5-7-1-{{.Node.Hostname}}-{{.Task.ID}}

environment:

CLUSTER_NAME: "pxc-cluster"

MYSQL_ROOT_PASSWORD: "root32456"

PXC_SERVICE: "pxc.service.consul"

DISCOVERY_SERVICE: "consul-prod"

CONSUL_SERVICE: "pxc"

MYSQL_TUNE_BEFORE_START: "true"

XTRABACKUP_PASSWORD: "xtrabackuproot32456"

EXPORTER_PASSWORD: "3syvjKuA84pb6T8gcBF7t3VCkPfGVwq"

###MYSQL TUNE

INNODB_LOG_FILES_IN_GROUP: "2"

INNODB_LOG_FILE_SIZE: "128M"

INNODBFLUSH_LOG_AT_TRX_COMMIT: "2"

INNODB_FILE_PER_TABLE: "1"

INNODB_BUFFER_POOL_SIZE: "2G"

INNODB_AUTOINC_LOCK_MODE: "2"

MAX_ALLOWED_PACKET: "128M"

MAX_CONNECT_ERRORS: "1000000"

MAX_CONNECTIONS: "300"

WSREP_SLAVE_THREADS: "2"

WAIT_TIMEOUT: "200"

TABLE_OPEN_CACHE: "4096"

MAX_HEAD_TABLE_SIZE: "64M"

TMP_TABLE_SIZE: "64M"

BINLOG_FOMAT: "ROW"

###MYSQL REPLICATION MASTER

GTID_REPLICATION: "true"

REPLICATION_ROLE: "master"

EXPIRE_LOGS_DAYS: "10"

MAX_BINLOG_SIZE: "1000M"

INNODB_LOCKS_UNSAFE_FOR_BINLOG: "1"

CONSUL_AGENT_SLEEP_TIME: "20"

#CLUSTER_JOIN: "true"

volumes:

- "pxc_5_7_1:/var/lib/mysql"

#entrypoint: tail -f /dev/null

networks:

network_dev_dev-network:

tty: true

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 1000

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.pxc1 == true ]

pxc-5-7-2:

image: devsadds/pxc:5.7.32-35-57-consul-1.9.3-bionic-v2.3.3

hostname: pxc-5-7-2-{{.Node.Hostname}}-{{.Task.ID}}

environment:

CLUSTER_NAME: "pxc-cluster"

MYSQL_ROOT_PASSWORD: "root32456"

PXC_SERVICE: "pxc.service.consul"

DISCOVERY_SERVICE: "consul-prod"

CONSUL_SERVICE: "pxc"

MYSQL_TUNE_BEFORE_START: "true"

XTRABACKUP_PASSWORD: "xtrabackuproot32456"

EXPORTER_PASSWORD: "3syvjKuA84pb6T8gcBF7t3VCkPfGVwq"

###MYSQL TUNE

INNODB_LOG_FILES_IN_GROUP: "2"

INNODB_LOG_FILE_SIZE: "128M"

INNODBFLUSH_LOG_AT_TRX_COMMIT: "2"

INNODB_FILE_PER_TABLE: "1"

INNODB_BUFFER_POOL_SIZE: "2G"

INNODB_AUTOINC_LOCK_MODE: "2"

MAX_ALLOWED_PACKET: "128M"

MAX_CONNECT_ERRORS: "1000000"

MAX_CONNECTIONS: "300"

WSREP_SLAVE_THREADS: "2"

WAIT_TIMEOUT: "200"

TABLE_OPEN_CACHE: "4096"

MAX_HEAD_TABLE_SIZE: "64M"

TMP_TABLE_SIZE: "64M"

BINLOG_FOMAT: "ROW"

###MYSQL REPLICATION MASTER

GTID_REPLICATION: "true"

REPLICATION_ROLE: "master"

EXPIRE_LOGS_DAYS: "10"

MAX_BINLOG_SIZE: "1000M"

INNODB_LOCKS_UNSAFE_FOR_BINLOG: "1"

CONSUL_AGENT_SLEEP_TIME: "100"

CLUSTER_JOIN: "true"

volumes:

- "pxc_5_7_2:/var/lib/mysql"

networks:

network_dev_dev-network:

#entrypoint: tail -f /dev/null

tty: true

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 1000

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.pxc2 == true ]

pxc-5-7-3:

image: devsadds/pxc:5.7.32-35-57-consul-1.9.3-bionic-v2.3.3

hostname: pxc-5-7-3-{{.Node.Hostname}}-{{.Task.ID}}

environment:

CLUSTER_NAME: "pxc-cluster"

MYSQL_ROOT_PASSWORD: "root32456"

PXC_SERVICE: "pxc.service.consul"

DISCOVERY_SERVICE: "consul-prod"

CONSUL_SERVICE: "pxc"

MYSQL_TUNE_BEFORE_START: "true"

XTRABACKUP_PASSWORD: "xtrabackuproot32456"

EXPORTER_PASSWORD: "3syvjKuA84pb6T8gcBF7t3VCkPfGVwq"

###MYSQL TUNE

INNODB_LOG_FILES_IN_GROUP: "2"

INNODB_LOG_FILE_SIZE: "128M"

INNODBFLUSH_LOG_AT_TRX_COMMIT: "2"

INNODB_FILE_PER_TABLE: "1"

INNODB_BUFFER_POOL_SIZE: "2G"

INNODB_AUTOINC_LOCK_MODE: "2"

MAX_ALLOWED_PACKET: "128M"

MAX_CONNECT_ERRORS: "1000000"

MAX_CONNECTIONS: "300"

WSREP_SLAVE_THREADS: "2"

WAIT_TIMEOUT: "200"

TABLE_OPEN_CACHE: "4096"

MAX_HEAD_TABLE_SIZE: "64M"

TMP_TABLE_SIZE: "64M"

BINLOG_FOMAT: "ROW"

###MYSQL REPLICATION MASTER

GTID_REPLICATION: "true"

REPLICATION_ROLE: "master"

EXPIRE_LOGS_DAYS: "10"

MAX_BINLOG_SIZE: "1000M"

INNODB_LOCKS_UNSAFE_FOR_BINLOG: "1"

CONSUL_AGENT_SLEEP_TIME: "160"

CLUSTER_JOIN: "true"

volumes:

- "pxc_5_7_3:/var/lib/mysql"

networks:

network_dev_dev-network:

#entrypoint: tail -f /dev/null

tty: true

deploy:

mode: replicated

replicas: 1

restart_policy:

condition: on-failure

delay: 15s

max_attempts: 1000

window: 180s

update_config:

parallelism: 1

delay: 20s

failure_action: continue

monitor: 60s

max_failure_ratio: 0.3

placement:

constraints: [ node.labels.pxc3 == true ]

volumes:

pxc_5_7_1:

pxc_5_7_2:

pxc_5_7_3:

consul-data:

networks:

pxc-net:

driver: overlay

ipam:

driver: default

config:

- subnet: 10.22.0.0/16

CONSUL_AGENT_SLEEP_TIME – is pause between start entrypoint in docker * 2. 20=40 – for coerrent init order.

Create network for connection

docker network create -d overlay network_dev_dev-networkDeploy stack

cd /docker-compose/SWARM/databases/pxc5_7

docker stack deploy -c docker-compose.yml pxc --resolve-image always --prune --with-registry-auth Wait until cluster init.

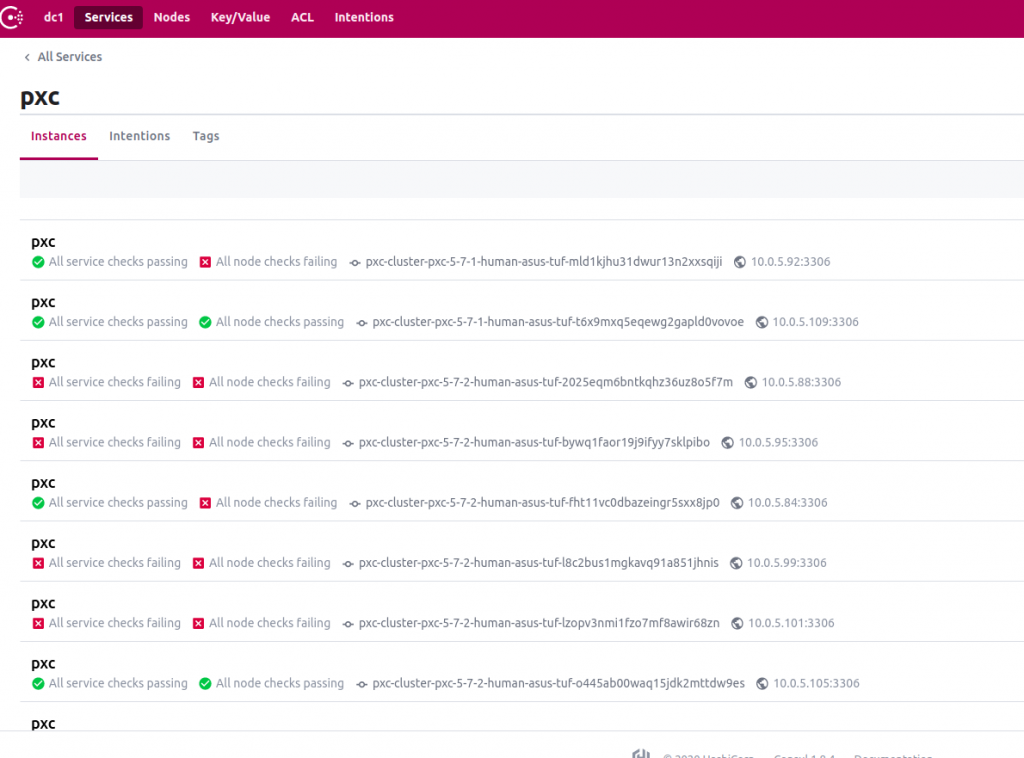

Go to web ui(unsecured)

http://192.168.1.111:8600/ui/dc1/services/pxc/instances

and see all nodes done.

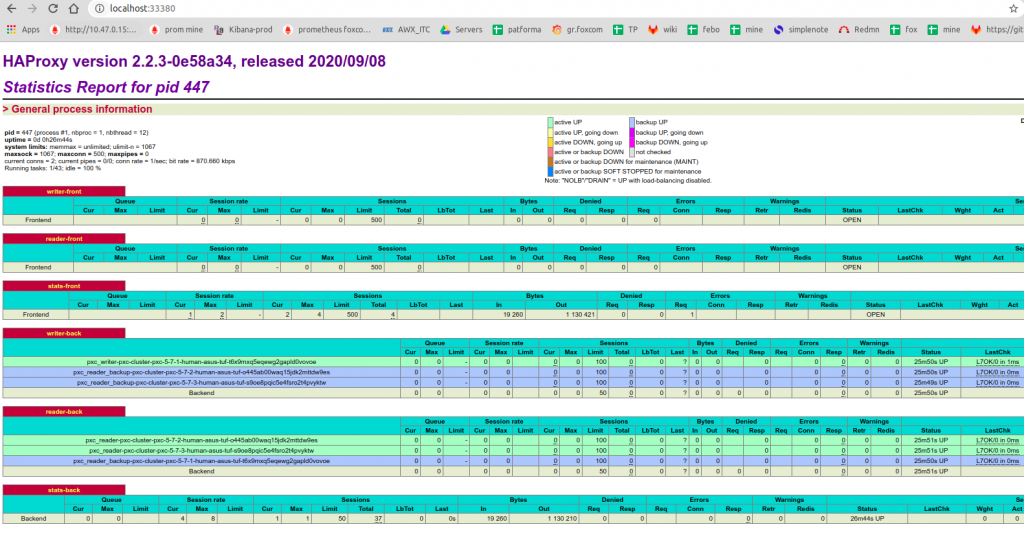

Deploy haproxy cluster for pxc cluster – one node become on port 3306, other node become on port 3307.

mkdir -p /docker-compose/SWARM/services/haproxy-mysql/

Create docker-compose.yml

version: "3.8"

x-logging:

&rsyslog-logging

driver: syslog

options:

syslog-address: "udp://127.0.0.1:515"

x-logging-json:

&json-logging

driver: json-file

options:

max-size: '100m'

max-file: '5'

x-deploy:

&haproxy-deploy

mode: global

#replicas: 1

restart_policy:

condition: on-failure

delay: 10s

max_attempts: 40

window: 10s

update_config:

parallelism: 1

delay: 5s

failure_action: rollback

monitor: 25s

max_failure_ratio: 0.3

order: stop-first

placement:

constraints: [ node.labels.haproxy == true ]

services:

haproxy-mysql:

image: devsadds/haproxy-mysql:2.3.5_consul-1.9.3_consul-template-0.25.1-v1.2.2

ports:

- target: 3306

published: 3306

protocol: tcp

mode: host

- target: 3307

published: 3307

protocol: tcp

mode: host

- target: 80

published: 33380

protocol: tcp

mode: host

- target: 19101

published: 19102

protocol: tcp

mode: host

environment:

DOCKER_ROLE: "haproxy"

DISCOVERY_SERVICE: "consul"

DISCOVERY_SERVICE_HOST: "consul-prod"

DISCOVERY_SERVICE_NAME: "pxc"

HAPROXY_STATS_URL: "/"

HAPROXY_STATS_AUTH_USER: "human"

HAPROXY_STATS_AUTH_PASSWORD: "323232323"

HAPROXY_STATS_ALLOW_HOSTS: "192.168.56.0/24 10.0.0.0/8 172.16.0.0/12127.0.0.1/32"

HAPROXY_SERVICE_ALLOW_HOSTS: "192.168.56.0/24 10.0.0.0/8 172.16.0.0/12127.0.0.1/32"

SERVER_NAME: "haproxy"

HAPROXY_MYSQL_CHECK_USER: "hpclustercheckuser"

HAPROXY_MYSQL_BACKEND_MAX_CONN: "200"

networks:

network_dev_dev-network:

aliases:

- haproxy-mysql

deploy:

<<: *haproxy-deploy

networks:

network_dev_dev-network:

driver: overlay

external: true

HAPROXY_STATS_ALLOW_HOSTS: allow hosts for webui

HAPROXY_SERVICE_ALLOW_HOSTS: allowed host for connection to haproxy

Add label for haproxy deploy

docker node update --label-add haproxy=true node1 && docker node update --label-add haproxy=true node4Deploy haproxy

export DOCKER_SWARM_STACK_NAME="haproxy-mysql"

docker stack deploy -c docker-compose.yml ${DOCKER_SWARM_STACK_NAME} --resolve-image always --prune

And create user($HAPROXY_MYSQL_CHECK_USER) for haproxy check in percona cluster.

CREATE USER 'hpclustercheckuser'@'%' IDENTIFIED BY '';

GRANT PROCESS ON *.* TO 'hpclustercheckuser'@'%';Go to webui

And see all nodes ok, if

Fix cluster after crash all nodes

Edit file and set safe_to_bootstrap to 1 on node with latest data.

cd /docker-compose/SWARM/databases/pxc5_7

docker stack rm pxcnano /var/lib/docker/volumes/pxc_5_7_1/_data/grastate.datsafe_to_bootstrap 1and stark cluster with one node again

cd /docker-compose/SWARM/databases/pxc5_7uncoomment in docker-compose.yml

CLUSTER_JOIN: "True"If error during sst from second node – exec on first node in mysql

SET GLOBAL wsrep_provider_options='pc.bootstrap=YES';for skip init empty cluster after start and simple start with last data current.

Deploy cluster

docker stack deploy -c docker-compose.yml pxc --resolve-image always --prune --with-registry-auth Reconnect pxc nodes to consul docker, if consul docker restarted

Go into container with pxc

ps aux | grep consulkill consul agent

kill -9 13and run process again in foregroud, with last command, added at the end of line

/bin/consul agent -retry-join consul -client 0.0.0.0 -bind 10.22.0.17 -node -99f341353c95 -data-dir /tmp -config-file /tmp/pxc.json &